From what has been released thus far, AI companies and those integrating AI into their products can take some steps now to prepare for EU AI Act compliance

Just before midnight on December 8, 2023, European Union lawmakers agreed on a provisional deal on the EU AI Act. The act’s goal is to govern the use of artificial intelligence (AI) systems within EU member countries, as well as to regulate the region’s major AI-enabled systems such as ChatGPT.

In doing so, the EU has become the first major global body to regulate AI systems, potentially setting a standard for other regulatory bodies to follow, just as it did with privacy law through the General Data Protection Regulation (GDPR). The EU’s AI Act will also set a minimum standard for foundational models such as ChatGPT and general-purpose AI systems (GPAI) to meet before they are put on the market. Non-compliance can lead to fines ranging from €7.5 million (US$8.1 million) or 1.5% of gross revenue at the low end, up to €35 million or 7% of global gross revenue at the high end.
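For a rough sense of scale, the two fine tiers quoted above can be turned into a small calculation. This is only an illustrative sketch: the published summaries do not spell out how the fixed amount and the revenue percentage combine, so the code assumes the larger of the two applies. The tier names and the `fine_exposure` helper are hypothetical and do not come from the act.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FineTier:
    label: str              # hypothetical label, not a term from the act
    fixed_eur: int          # fixed amount in euros, as quoted in the article
    revenue_share_bps: int  # share of global gross revenue in basis points


# Figures quoted in the article: EUR 7.5 million or 1.5%, up to EUR 35 million or 7%.
LOWEST_TIER = FineTier("lowest tier", 7_500_000, 150)
HIGHEST_TIER = FineTier("highest tier", 35_000_000, 700)


def fine_exposure(tier: FineTier, global_revenue_eur: int) -> int:
    """Estimate the fine for a tier, in whole euros.

    ASSUMPTION: the greater of the fixed amount and the revenue share applies.
    The article does not state this rule; check the final text of the act.
    """
    revenue_based = global_revenue_eur * tier.revenue_share_bps // 10_000
    return max(tier.fixed_eur, revenue_based)


if __name__ == "__main__":
    for tier in (LOWEST_TIER, HIGHEST_TIER):
        print(tier.label, fine_exposure(tier, 1_000_000_000))
```

Under that assumption, a company with €1 billion in global gross revenue would be measured against the 7% figure rather than the €35 million floor in the highest tier, which is why larger providers tend to focus on the percentage.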

The full text of the act itself will not be released until early 2024, and the act still requires full ratification from the European Parliament. In addition, Thierry Breton, the European Commissioner for the Internal Market of the EU, said the draft transparency and governance requirements should be published in about 12 months, with the final details going into effect in around 24 months. This means the act’s effective date has not been determined and could be years away.

Still, given what has been released from lawmakers thus far, there are some steps AI companies and those integrating AI into their products can take now to prepare for EU AI Act compliance.

The regulation’s coverage

While the act has not been released in its full form, EU lawmakers noted in a press release that the act “aims to ensure that fundamental rights, democracy, the rule of law and environmental sustainability are protected from high-risk AI, while boosting innovation and making Europe a leader in the field.” As such, while the act will ban some potential business uses for AI, many of the banned use cases are focused on individual rights.

These banned applications for AI include:

- Biometric categorization systems that use sensitive characteristics (e.g., race, sexual orientation, or political, religious or philosophical beliefs);

- Untargeted scraping of facial images from the internet or CCTV footage to create facial recognition databases;

- Emotion recognition in the workplace and educational institutions; and

- Social scoring that’s based on social behavior or personal characteristics.

There are some limited law enforcement exceptions to these bans, but real-time biometric surveillance in public spaces is restricted to protecting victims of certain crimes; preventing genuine, present, or foreseeable threats, such as terrorist attacks; and searching for people suspected of the most serious crimes.

In a published statement, Commissioner Breton noted the EU will take a “two-tiered approach” to regulation, “with transparency requirements for all general-purpose AI models and stronger requirements for powerful models with systemic impacts across our EU Single Market.” For general AI usage, these requirements will include drawing up technical documentation, complying with EU copyright law and disseminating detailed summaries about the content used for training. EU citizens will also have a right to launch complaints about AI systems and receive explanations about decisions based on high-risk AI systems that might impact their rights.

For those high-impact foundational models, the increased regulatory requirements will include conducting model evaluations, assessing and mitigating systemic risks, conducting adversarial testing, reporting to the European Commission on serious incidents, ensuring cybersecurity protections, and reporting on the AI systems’ energy efficiency. Lawmakers added that “until harmonized EU standards are published, GPAIs with systemic risk may rely on codes of practice to comply with the regulation,” according to the Commission’s press release.
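Teams that want to start tracking their readiness could capture the two tiers described above as a simple checklist. The sketch below only restates the obligations as summarized in this article; the identifiers are hypothetical, and it assumes the systemic-risk requirements apply on top of the baseline transparency requirements. How the act decides whether a model carries systemic risk is not covered here, so that flag is left as an input.

```python
# Obligations as summarized in the article; the wording is shorthand, not legal text.
BASELINE_GPAI = (
    "technical documentation",
    "EU copyright law compliance",
    "detailed summaries of training content",
)

SYSTEMIC_RISK_EXTRA = (
    "model evaluations",
    "systemic risk assessment and mitigation",
    "adversarial testing",
    "serious incident reporting to the European Commission",
    "cybersecurity protections",
    "energy efficiency reporting",
)


def obligations(has_systemic_risk: bool) -> list[str]:
    """List the obligations for a general-purpose AI model.

    ASSUMPTION: the systemic-risk tier adds to the baseline tier
    rather than replacing it.
    """
    items = list(BASELINE_GPAI)
    if has_systemic_risk:
        items.extend(SYSTEMIC_RISK_EXTRA)
    return items


def open_items(has_systemic_risk: bool, completed: set[str]) -> list[str]:
    """Return obligations not yet marked complete, in checklist order."""
    return [o for o in obligations(has_systemic_risk) if o not in completed]


if __name__ == "__main__":
    done = {"technical documentation", "adversarial testing"}
    for item in open_items(True, done):
        print("TODO:", item)
```

A list like this is no substitute for legal review, but it gives product and engineering teams a shared starting point while the full text is pending.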

On the goal of boosting innovation, however, lawmakers noted that they “wanted to ensure that businesses, especially SMEs [small and midsized enterprises], can develop AI solutions without undue pressure from industry giants controlling the value chain.” To do this, the act will also include provisions that promote regulatory sandboxes and real-world testing, providing an avenue to develop and train AI for regulatory compliance before it hits the market.

What it means for businesses

According to the Thomson Reuters Future of Professionals C-Suite Survey, 93% of professionals in the legal, tax & accounting, corporate, and government spaces believe AI needs regulation. However, respondents were split about who should take the lead in regulating AI, with many respondents at outside tax & accounting firms and law firms in particular preferring that individual professions, rather than government regulators, govern AI use.

Some respondents did welcome regulation at multiple levels, however, with one noting: “I believe that AI will (and should) be regulated by both the government [and] by my legal profession. That said, inevitably it will need self-regulation by its providers and additional regulation by the other professions utilizing it as well.”

While the EU AI Act is unlikely to have an immediate impact on the legal and tax professions (much like the recent U.S. Executive Order on AI, which largely focused on the technology and government sectors), its passing does signal a willingness from lawmakers to tackle AI usage. Still, because it is the first such step by any major global regulatory body, business interests voiced some concern, fearing that innovation could be curtailed or that copycat bills could follow.

“We have a deal, but at what cost?” Cecilia Bonefeld-Dahl, the Director General of DigitalEurope, told Reuters. “We fully supported a risk-based approach based on the uses of AI, not the technology itself, but the last-minute attempt to regulate foundation models has turned this on its head.”

Indeed, because the act goes beyond regulating use cases and also targets the foundational systems themselves, tech companies are likely to face an increased regulatory burden, including the technology providers that serve professional services firms.

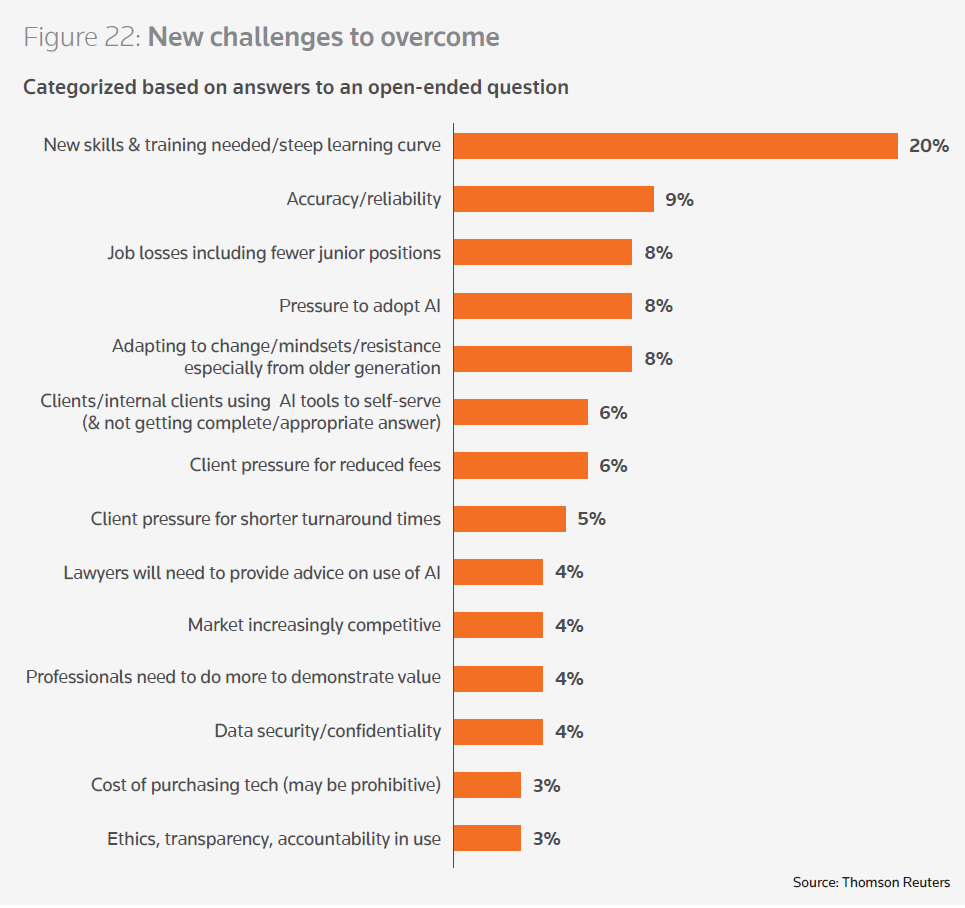

However, there is no reason to believe that these increased regulatory requirements will actually slow generative AI’s spread. When Future of Professionals respondents noted the top challenges that they believed AI needed to overcome before widespread adoption, the top answers included new skills and training; system accuracy and reliability; job losses from automation; and adapting to change — none of which are factors that will be heavily influenced by a new regulation.

Given generative AI’s potential to enable new ways of working and to make both internal and external work more efficient, the technology is likely here to stay, regardless of any regulation. As a result, both companies and professional services firms would be well advised to tackle regulations such as the EU’s AI Act early, in order to continue reaping the benefits of the technology as it becomes a more mainstream part of business life.